Note: this article supposes you have already read part 1. While it shouldn’t be required, you should still give it a try.

Now that we can boot to C, we need to start working on the C library. Let’s start with memory management.

What’s needed here?

So, what is memory management? Well, it can be divided into three levels. (There may be other ways, but this is the most common one.)

The highest level, that everyone reading should know about, is heap management. This is malloc, free and the likes. This level also the hardest to get right (at least for me it was), as the other levels can be quick to make. However for heap management, since I didn’t want a lot of fragmentation a first fit MM didn’t fit my wants.

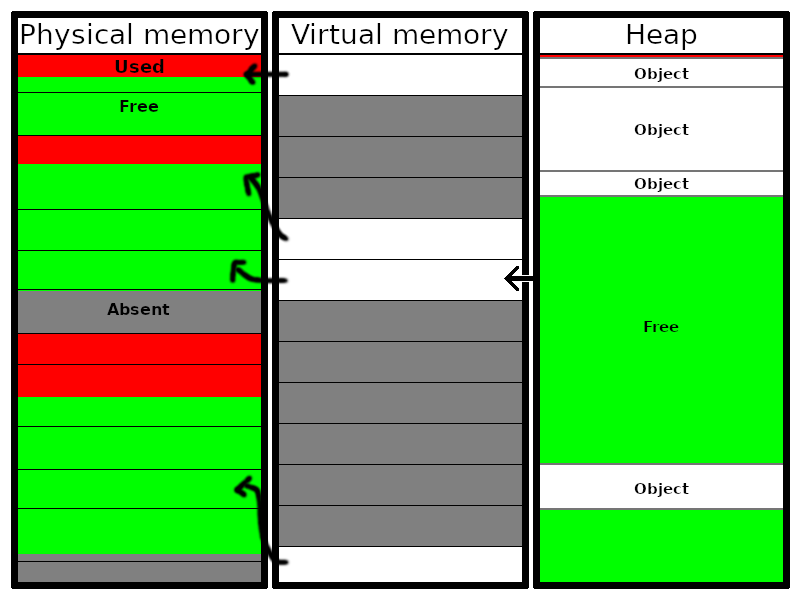

The level below is used less often, but we can still use it sometimes: it’s the virtual memory management (i’ll call it VMM later on). This is functions like mmap on Linux. This level manages the “conflict address” of two programs sharing the same addresses but having different data. In other words, this manages paging.

The lowest level, that standard programmers never use, is physical memory management (I’ll call it PMM later on). This is the level which gives the VMM actual memory to work with (paging maps virtual memory to physical memory).

When you try to call malloc, what happens then?

Well, first the function tries to grab some free space and reserve it. In case it fails because there is not enough, it will call the VMM to request some memory for the process to use. The VMM, in turn (directly or not), requests the PMM some memory to give to this process. The PMM answers with some physical address, that the VMM maps to some process-specific address, that is given to malloc, which does its thing (however it may be implemented).

That also means that, when you have multiple processes, the PMM and the VMM are the ones making sure that each processes has their own part of memory!

If the PMM gave the same address over and over again, every map is physically the same; meaning, when setting your local i in the stack to 5, you may also destroy a critical pointer in the heap!

The VMM, meanwhile, is the intermediary between the PMM and the processes. If the VMM also allowed processes to request to map any virtual page to any physical page, any process could then request every map in physical memory, accessing other processes’ memory (which is a huge security issue). It may also have other features, but those are merely extras (COW, “phantom” pages…).

Disclaimer: there might be a lot of ways to do it. I chose to do an unusual way, at least for heap management (also, it’s quite hard to find actual implementations for the other levels which are also documented and/or simple to understand).

Physical memory management

What do we need to do at this level?

There are multiple options:

- Just give memory when requested, wherever it may be

- Manage things like cache lines: make sure that they aren’t trashed as soon as tasks are switched, and so on and so forth.

Now, since it’s my first time, I didn’t bother with this and just went for the first option. However, there are still things to consider:

- A driver may want some blocks of memory that are contiguous to one another.

- Some drivers may want memory only in the lower memory.

- Segmentation is bad.

After reading some docs about the subject, I decided to copy (a very little bit) the way the Linux kernel does it, the way I understood it. (It may not be what it really is, but it works.)

First, when initializing the MMs, you give a free (virtual) page to initialize the PMM. This is so we can put things in this page, without worrying about running out. Next, you give the free memory addresses that were provided by the multiboot bootloader, and remove addresses that you want to reserve (kernel, memory-mapped IO/registers…).

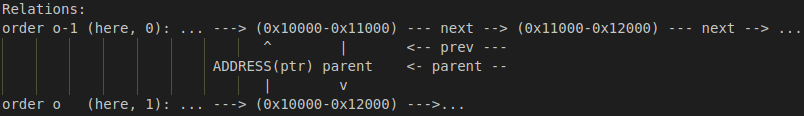

Then, at the beginning of the page is an array of block_t, which is (at least for now) only a pointer to a block_list_t. Like on the Linux kernel, this is a doubly-linked list (though I didn’t implement it the same way). It also contains a parent pointer and, for compactness, a uintptr_t ptr element.

struct block_t {

struct block_list_t {

struct block_list_t *prev;

struct block_list_t *next;

struct block_list_t *parent;

uintptr_t ptr;

// ptr can point to a page or the first block_list_t of a pair.

// Use the macro ADDRESS to get the actual address.

} *list;

} (*blocks)[MAX_ORDER];You might be wondering at this point, “nice, but why?” Well, here’s how the algorithm works.

Allocating physical memory

- The array of

block_ts is the start pointer for a list of blocks at a given order. This means that each element of the block list actually represent 2order contiguous pages. - When requesting (a) physical page(s), you also provide the order you want. This means that, if the algorithm returns a non-null value, you’ll have 2order contiguous pages. You also provide other stuff, such as in which memory would you prefer it to be at (lower or upper).

- If there is a free block with the correct order, then the algorithm marks it as used and returns it. The only exception is that the PMM always keep an order 0 block free for its personal use.

- However, if there is not a page of the correct order, the PMM requests a page of a higher order. This block is split into two halves, two 2order pages that are called “buddies” in the Linux kernel. This may happen recursively as well. The “parent” block is then marked as split and made to point to the left buddy, and the two below are linked to their parent through the “parent” flag.

Now, an interesting thing to notice is that there is no flag field for the blocks. Indeed, there is a workaround.

Remember the ptr element? Well, this is where alignment comes in.

A page is always (at least) 212 bits aligned (on x86 and x86_64): the lowest 12 bits are unused. Alternatively, the alignment of a block_list_t is 4: the lowest two bits are unused. This is why, those bits are actually used as flags! The least significant bit differentiate between a pointer to a page and a pointer to the left buddy, and the 2nd least significant bit is used as the other flag! This, however, requires to be extra-careful when allocating a new block. But it works!

Now, allocating is nice, but sometimes we need to free memory too…

Freeing physical memory

Freeing memory is approximately doing the opposite of allocating.

First, you mark the page as freed. However, now you need to check if the page was in a buddies pair. If it was, then check for the other block: if both are free, the you can actually merge them back! Somehow deallocate the buddies pair (I have a simply-linked list of such blocks that are used before allocating new blocks, to avoid useless memory allocations), mark the parent as a whole page that is also free, and reiterate the process if the parent itself has a parent.

This may seem simple at first glance, but you’ll probably have some problems somewhere in this step at some point.

Now, just add some functions for managing memory-mapped registers and so on, and you’re good to get to work on the next level!

Virtual memory management

Now that you can allocate and deallocate physical memory, you need to map it in the virtual space.

First, make sure that you can make a direct allocation and deallocation of virtual memory at given addresses to some given physical memory addresses. This may be useful for memory-mapped registers.

Now, how I did it (which is not the “standard” way of doing it, according to what I’ve read) is to have a function to allocate virtual memory at a given address for a given number of pages (along with some given flags). I forbid to allocate NULL and a page in a Page Table, check if allocation is doable, then do the allocation. Note that in this case, physical continuity is absolutely not guaranteed.

Then I have a function to allocate “near” a certain address while staying in a certain range of address, which simply repeatedly try allocating addresses starting from the hint, and a function to allocate in a certain address range (which is an allocation “near” the beginning of the range).

Freeing is quite simple, just remove the entry in the page table.

Now, this is a very basic VMM. What could be done next is:

- In case of a page fault, check if the page really is absent. If it is actually present, then you need to invalidate the TLB, then return from the exception. This is caused by paging being cached.

- When deallocating a page, emit an IPI (Inter-Process Interrupt) somehow (for instance, through the APIC!) to message the other CPUs to invalidate their TLB.

- When allocating a page, don’t physically allocate it immediately! Wait for a page fault before doing so.

- When you run out of physical memory, swap pages to disk, then retrieve them on page faults.

Those are only some things left to do in my VMM. Of course, until you enable multiprocessing, IPIs are useless, and at the start, copy-on-write can be the source of hard to find bugs. That’s why, you should first focus on moving forward before coming back, when you notice this is starting to become a bottleneck or if you want to add some features.

Heap management

Speaking of features, now is the time to make malloc!

Now, I know that in kernels, this function is called kmalloc and that there are probably differences, the problem is that I couldn’t really find those differences (and the ones I read about were features I didn’t want). So, plain malloc it is.

However, I find the C standard on memory allocation quite… strange. Why does malloc only has the size of the buffer as its parameter, but calloc has two? What’s the use of knowing the size of one element, when you need to clear its entirety anyway?

So, my heap contains the size of each element as well as the number of elements. I also added an alloc function which does what malloc does, but with size info added, and remalloc and recalloc which changes the number of elements (and clear newly allocated elements for the second one). Of course, malloc, calloc and realloc all do what the C standard say they do (and both malloc and realloc set the size of one element to 1).

(Note after the article is done: I noticed, while working on my pull request in box64, that OpenBSD actually has a reallocarray that is near what my remalloc is for… The more you know!)

Allocating elements

Inside, what malloc, calloc and alloc start do are the same thing: allocate some amount of contiguous memory. So how do we do that?

I chose to do a header-based best-fit heap manager. The first thing I have is the heap start. (If you look very closely at the diagram above, you’ll notice there is a red block in the “heap” column: that’s what it represent.) This is a free header containing 0 free byte. Then each free header contains a pointer to the next free header, sorted by increasing size.

If I find a perfect match, I remove it from the list, mark it as used and return the corresponding block. If I find a block big enough (meaning, it is big enough to hold the requested memory, a new header and at least 8 bytes), then it is removed from the free chain, marked as used, a new header is made after the allocated block and the allocated block is returned.

If no match is found, then we need to allocate some contiguous pages using our VMM, and add two headers to those pages (at the beginning of the first page for an “used” block header, then a “free” block header after the newly allocated block).

One thing to notice is that with this scheme, buffer overruns are extremely dangerous. Indeed, after each free block is an used block header, and after each used block is another header (except, of course, for the last header of each page). So if a buffer overrun occurs, the header metadata is immediately destroyed.

I personally added a signature field that contains a fixed value (‘hHDR’, heap header), and a function to check the signature of all headers in the free chain. If a mismatch is detected, _panicp (kernel panic with pointer) is called.

struct heap_header {

uint32_t sig;

union {

struct heap_header *next;

size_t isz; // 1-(size_t)(-1) for used (with given item size)

};

size_t totsize; // total free block size, item size if block used

} *heap_start;Adding this a bit later: while working on box64, I noticed that glibc actually has an equivalent function: mcheck_check_all (which also requires a call to mcheck/mcheck_pedantic). The more you know!

Reallocating elements

Now, we can allocate elements. To reallocate elements, some libraries do a malloc/memcpy even if this is unnecessary. For my OS, I decided to only resize the allocated memory if possible. As such, the steps are:

- If reallocation increases size,

- Acquire next header if it is in the free chain

- If I have the header and if the buffer available is big enough, resize in-place (move the next header, etc)

- Else, allocate memory somewhere else, move (

memcpy) memory, free last pointer.

- If reallocation doesn’t change size, do “nothing”

- If reallocation reduces size,

- If there is no next buffer and space added is too small, allocate memory and

memcpydata - Else, reduce allocated buffer and create a new free header (eventually removing the old one).

- If there is no next buffer and space added is too small, allocate memory and

Seems easy enough, but while reading myself to write this article I noticed at least two bugs inside…

Freeing elements

Now that we used our heap, we could want to free it.

That’s quite easy: detect if there is a free block before and/or after, and merge the blocks. Don’t forget to remove those blocks from the free chain to reinsert them: we still want an ordered list for a best fit memory manager!

One last thing

Personally, I marked all functions in the memory management as “warn me if I ignore the return value” (the GCC warn_unused_result attribute). While checking for the return value of the fixed allocation of a virtual page to a physical page may seem useless, this call may still fail in some cases: pages not aligned (I don’t align them as it means there has been a problem upstream), NULL mapping or page already allocated are the three ways this function can fail. I’d rather make my kernel _panic and think about why such a thing occurred than having to debug 5 hours after booting why the letter “a” isn’t displaying properly (though, I doubt it’ll be one day as complete as that… and even if it is it would rather crash, but you get the idea).

If you have any suggestion for the format or what to write on next, please let me know in the comments.

One reply on “A peek at OS-deving part 2: memory management”

Deep dive on wrappping libs would be nice next article.